toy project - find_waldo (윌리를 찾아라) 2022.12.11

1. 목표

CNN-encoder decoder 를 사용하여 윌리를 찾아보기

데이터셋은 케글(https://www.kaggle.com/code/kerneler/starter-find-waldo-36151367-f/data)에서 가져왔다.

2. 구현

- 모듈

import numpy as np

import matplotlib.pyplot as plt

import matplotlib.patches as patches

import keras.layers as layers

import keras.optimizers as optimizers

from keras.models import Model, load_model

from keras.utils import to_categorical

from keras.callbacks import LambdaCallback, ModelCheckpoint, ReduceLROnPlateau

import tensorflow as tf

import seaborn as sns

from PIL import Image

from skimage.transform import resize

import threading, random, os- tensorflow 버전과 GPU 확인

gpus = tf.config.experimental.list_logical_devices('GPU')

print('>>> Tensorflow Version: {}'.format(tf.__version__))

print('>>> Load GPUS: {}'.format(gpus))- DATASET load

DATA_DIR = os.getcwd()

DATASET_DIR = os.path.join(DATA_DIR, 'datasets')imgs = np.load(os.path.join(DATASET_DIR, 'imgs_uint8.npy'), allow_pickle=True).astype(np.float32) / 255.

labels = np.load(os.path.join(DATASET_DIR, 'labels_uint8.npy'), allow_pickle=True).astype(np.float32) / 255.

waldo_sub_imgs = np.load(os.path.join(DATASET_DIR, 'waldo_sub_imgs_uint8.npy'), allow_pickle=True) / 255.

waldo_sub_labels = np.load(os.path.join(DATASET_DIR, 'waldo_sub_labels_uint8.npy'), allow_pickle=True) / 255.numpy로 이루어진 데이터를 이미지로 로드한다. 로드한 이미지를 255로 나누어서 normalize 과정을 거친다.

- DATA Generate

데이터를 랜덤으로 크롭하고 플립하여 데이터를 재가공한다.

PANNEL_SIZE = 224

class BatchIndices(object):

def __init__(self, n, bs, shuffle=False):

self.n,self.bs,self.shuffle = n,bs,shuffle

self.lock = threading.Lock()

self.reset()

def reset(self):

self.idxs = (np.random.permutation(self.n)

if self.shuffle else np.arange(0, self.n))

self.curr = 0

def __next__(self):

with self.lock:

if self.curr >= self.n: self.reset()

ni = min(self.bs, self.n-self.curr)

res = self.idxs[self.curr:self.curr+ni]

self.curr += ni

return res랜덤으로 셔플

class segm_generator(object):

def __init__(self, x, y, bs=64, out_sz=(224,224), train=True, waldo=True):

self.x, self.y, self.bs, self.train = x,y,bs,train

self.waldo = waldo

self.n = x.shape[0]

self.ri, self.ci = [], []

for i in range(self.n):

ri, ci, _ = x[i].shape

self.ri.append(ri), self.ci.append(ci)

self.idx_gen = BatchIndices(self.n, bs, train)

self.ro, self.co = out_sz

self.ych = self.y.shape[-1] if len(y.shape)==4 else 1

def get_slice(self, i,o):

start = random.randint(0, i-o) if self.train else (i-o)

return slice(start, start+o)

def get_item(self, idx):

slice_r = self.get_slice(self.ri[idx], self.ro)

slice_c = self.get_slice(self.ci[idx], self.co)

x = self.x[idx][slice_r, slice_c]

y = self.y[idx][slice_r, slice_c]

if self.train and (random.random()>0.5):

y = y[:,::-1]

x = x[:,::-1]

if not self.waldo and np.sum(y)!=0:

return None

return x, to_categorical(y, num_classes=2).reshape((y.shape[0] * y.shape[1], 2))

def __next__(self):

idxs = self.idx_gen.__next__()

items = []

for idx in idxs:

item = self.get_item(idx)

if item is not None:

items.append(item)

if not items:

return None

xs,ys = zip(*tuple(items))

return np.stack(xs), np.stack(ys)

랜덤으로 크롭

def seg_gen_mix(x1, y1, x2, y2, tot_bs=4, prop=0.34, out_sz=(224,224), train=True):

"""

Mixes generator output. The second generator is set to skip images that contain any positive targets.

# Arguments

x1, y1: input/targets for waldo sub-images

x2, y2: input/targets for whole images

tot_bs: total batch size

prop: proportion of total batch size consisting of first generator output

"""

n1 = int(tot_bs*prop)

n2 = tot_bs - n1

sg1 = segm_generator(x1, y1, n1, out_sz = out_sz ,train=train)

sg2 = segm_generator(x2, y2, n2, out_sz = out_sz ,train=train, waldo=False)

while True:

out1 = sg1.__next__()

out2 = sg2.__next__()

if out2 is None:

yield out1

else:

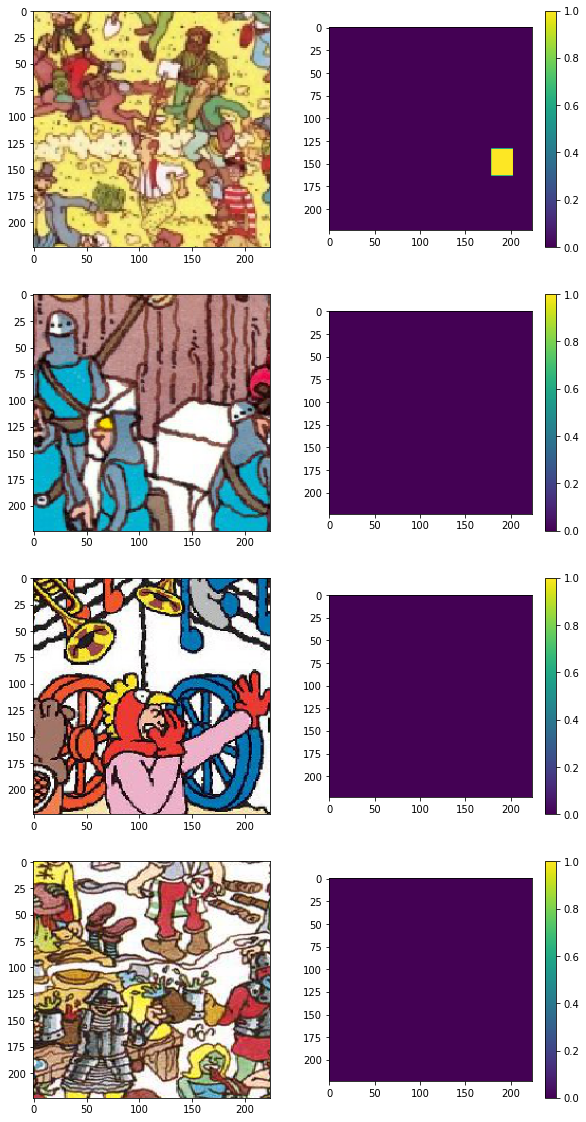

yield np.concatenate((out1[0], out2[0])), np.concatenate((out1[1], out2[1]))- Sample Image 출력

gen_mix = seg_gen_mix(waldo_sub_imgs, waldo_sub_labels, imgs, labels, tot_bs=4, prop=0.34, out_sz=(PANNEL_SIZE, PANNEL_SIZE))

X, y = next(gen_mix)

plt.figure(figsize=(10, 20))

for i, img in enumerate(X):

plt.subplot(X.shape[0], 2, 2*i+1)

plt.imshow(X[i])

plt.subplot(X.shape[0], 2, 2*i+2)

plt.colorbar()

plt.imshow(y[i][:,1].reshape((PANNEL_SIZE, PANNEL_SIZE)))

크롭된 이미지에 윌리가 없는 이미지가 윌리가 있는 이미지보다 훨씬 많기에 (Too many 0 value) Class weight를 skewed를 잡아 학습의 불균형을 잡아준다. (https://keras.io/models/sequential/ )

freq0 = np.sum(labels==0)

freq1 = np.sum(labels==1)

print(freq0, freq1)

sns.distplot(labels.flatten(), kde=False, hist_kws={'log':True})

- Class weight 만들기

sample_weights = np.zeros((6, PANNEL_SIZE * PANNEL_SIZE, 2))

sample_weights[:,:,0] = 1. / freq0

sample_weights[:,:,1] = 1.

plt.subplot(1,2,1)

plt.imshow(sample_weights[0,:,0].reshape((224, 224)))

plt.colorbar()

plt.subplot(1,2,2)

plt.imshow(sample_weights[0,:,1].reshape((224, 224)))

plt.colorbar()

-모델 만들기

def build_model():

inputs = layers.Input(shape=(PANNEL_SIZE, PANNEL_SIZE, 3))

net = layers.Conv2D(64, kernel_size=3, padding='same')(inputs)

net = layers.LeakyReLU()(net)

net = layers.MaxPool2D(pool_size=2)(net)

shortcut_1 = net

net = layers.Conv2D(128, kernel_size=3, padding='same')(net)

net = layers.LeakyReLU()(net)

net = layers.MaxPool2D(pool_size=2)(net)

shortcut_2 = net

net = layers.Conv2D(256, kernel_size=3, padding='same')(net)

net = layers.LeakyReLU()(net)

net = layers.MaxPool2D(pool_size=2)(net)

shortcut_3 = net

net = layers.Conv2D(256, kernel_size=1, padding='same')(net)

net = layers.LeakyReLU()(net)

net = layers.MaxPool2D(pool_size=2)(net)

net = layers.UpSampling2D(size=2)(net)

net = layers.Conv2D(256, kernel_size=3, padding='same')(net)

net = layers.Activation('relu')(net)

net = layers.Add()([net, shortcut_3])

net = layers.UpSampling2D(size=2)(net)

net = layers.Conv2D(128, kernel_size=3, padding='same')(net)

net = layers.Activation('relu')(net)

net = layers.Add()([net, shortcut_2])

net = layers.UpSampling2D(size=2)(net)

net = layers.Conv2D(64, kernel_size=3, padding='same')(net)

net = layers.Activation('relu')(net)

net = layers.Add()([net, shortcut_1])

net = layers.UpSampling2D(size=2)(net)

net = layers.Conv2D(2, kernel_size=1, padding='same')(net)

net = layers.Reshape((-1, 2))(net)

net = layers.Activation('softmax')(net)

model = Model(inputs=inputs, outputs=net)

model.compile(

loss='categorical_crossentropy',

optimizer=optimizers.Adam(),

metrics=['acc'],

sample_weight_mode='temporal'

)

return model__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_5 (InputLayer) (None, 224, 224, 3) 0

__________________________________________________________________________________________________

conv2d_33 (Conv2D) (None, 224, 224, 64) 1792 input_5[0][0]

__________________________________________________________________________________________________

leaky_re_lu_9 (LeakyReLU) (None, 224, 224, 64) 0 conv2d_33[0][0]

__________________________________________________________________________________________________

max_pooling2d_17 (MaxPooling2D) (None, 112, 112, 64) 0 leaky_re_lu_9[0][0]

__________________________________________________________________________________________________

conv2d_34 (Conv2D) (None, 112, 112, 128 73856 max_pooling2d_17[0][0]

__________________________________________________________________________________________________

leaky_re_lu_10 (LeakyReLU) (None, 112, 112, 128 0 conv2d_34[0][0]

__________________________________________________________________________________________________

max_pooling2d_18 (MaxPooling2D) (None, 56, 56, 128) 0 leaky_re_lu_10[0][0]

__________________________________________________________________________________________________

conv2d_35 (Conv2D) (None, 56, 56, 256) 295168 max_pooling2d_18[0][0]

__________________________________________________________________________________________________

leaky_re_lu_11 (LeakyReLU) (None, 56, 56, 256) 0 conv2d_35[0][0]

__________________________________________________________________________________________________

max_pooling2d_19 (MaxPooling2D) (None, 28, 28, 256) 0 leaky_re_lu_11[0][0]

__________________________________________________________________________________________________

conv2d_36 (Conv2D) (None, 28, 28, 256) 65792 max_pooling2d_19[0][0]

__________________________________________________________________________________________________

leaky_re_lu_12 (LeakyReLU) (None, 28, 28, 256) 0 conv2d_36[0][0]

__________________________________________________________________________________________________

max_pooling2d_20 (MaxPooling2D) (None, 14, 14, 256) 0 leaky_re_lu_12[0][0]

__________________________________________________________________________________________________

up_sampling2d_17 (UpSampling2D) (None, 28, 28, 256) 0 max_pooling2d_20[0][0]

__________________________________________________________________________________________________

conv2d_37 (Conv2D) (None, 28, 28, 256) 590080 up_sampling2d_17[0][0]

__________________________________________________________________________________________________

activation_25 (Activation) (None, 28, 28, 256) 0 conv2d_37[0][0]

__________________________________________________________________________________________________

add_13 (Add) (None, 28, 28, 256) 0 activation_25[0][0]

max_pooling2d_19[0][0]

__________________________________________________________________________________________________

up_sampling2d_18 (UpSampling2D) (None, 56, 56, 256) 0 add_13[0][0]

__________________________________________________________________________________________________

conv2d_38 (Conv2D) (None, 56, 56, 128) 295040 up_sampling2d_18[0][0]

__________________________________________________________________________________________________

activation_26 (Activation) (None, 56, 56, 128) 0 conv2d_38[0][0]

__________________________________________________________________________________________________

add_14 (Add) (None, 56, 56, 128) 0 activation_26[0][0]

max_pooling2d_18[0][0]

__________________________________________________________________________________________________

up_sampling2d_19 (UpSampling2D) (None, 112, 112, 128 0 add_14[0][0]

__________________________________________________________________________________________________

conv2d_39 (Conv2D) (None, 112, 112, 64) 73792 up_sampling2d_19[0][0]

__________________________________________________________________________________________________

activation_27 (Activation) (None, 112, 112, 64) 0 conv2d_39[0][0]

__________________________________________________________________________________________________

add_15 (Add) (None, 112, 112, 64) 0 activation_27[0][0]

max_pooling2d_17[0][0]

__________________________________________________________________________________________________

up_sampling2d_20 (UpSampling2D) (None, 224, 224, 64) 0 add_15[0][0]

__________________________________________________________________________________________________

conv2d_40 (Conv2D) (None, 224, 224, 2) 130 up_sampling2d_20[0][0]

__________________________________________________________________________________________________

reshape_5 (Reshape) (None, 50176, 2) 0 conv2d_40[0][0]

__________________________________________________________________________________________________

activation_28 (Activation) (None, 50176, 2) 0 reshape_5[0][0]

==================================================================================================

Total params: 1,395,650

Trainable params: 1,395,650

Non-trainable params: 0

__________________________________________________________________________________________________-학습

gen_mix = seg_gen_mix(waldo_sub_imgs, waldo_sub_labels, imgs, labels, tot_bs=6, prop=0.34, out_sz=(PANNEL_SIZE, PANNEL_SIZE))

def on_epoch_end(epoch, logs):

print('\r', 'Epoch:%5d - loss: %.4f - acc: %.4f' % (epoch, logs['loss'], logs['acc']), end='')

print_callback = LambdaCallback(on_epoch_end=on_epoch_end)

history = model.fit_generator(

gen_mix, steps_per_epoch=6, epochs=500,

class_weight=sample_weights,

verbose=0,

callbacks=[

print_callback,

ReduceLROnPlateau(monitor='loss', factor=0.2, patience=100, verbose=1, mode='auto', min_lr=1e-05)

]

)

model.save('model.h5')

plt.figure(figsize=(12, 4))

plt.subplot(1, 2, 1)

plt.title('loss')

plt.plot(history.history['loss'])

plt.subplot(1, 2, 2)

plt.title('accuracy')

plt.plot(history.history['acc'])Epoch: 499 - loss: 0.0043 - acc: 0.9984

-평가 후 결과를 오버레이

img_filename = '02.jpg'

test_img = np.array(Image.open(os.path.join('test_imgs', img_filename)).resize((2800, 1760), Image.NEAREST)).astype(np.float32) / 255.

plt.figure(figsize=(20, 10))

plt.imshow(test_img)

def bbox_from_mask(img):

rows = np.any(img, axis=1)

cols = np.any(img, axis=0)

y1, y2 = np.where(rows)[0][[0, -1]]

x1, x2 = np.where(cols)[0][[0, -1]]

return x1, y1, x2, y2

x1, y1, x2, y2 = bbox_from_mask((pred_out > 0.8).astype(np.uint8))

print(x1, y1, x2, y2)

# make overlay

overlay = np.repeat(np.expand_dims(np.zeros_like(pred_out, dtype=np.uint8), axis=-1), 3, axis=-1)

alpha = np.expand_dims(np.full_like(pred_out, 255, dtype=np.uint8), axis=-1)

overlay = np.concatenate([overlay, alpha], axis=-1)

overlay[y1:y2, x1:x2, 3] = 0

plt.figure(figsize=(20, 10))

plt.imshow(overlay)fig, ax = plt.subplots(figsize=(20, 10))

ax.imshow(test_img)

ax.imshow(overlay, alpha=0.5)

rect = patches.Rectangle((x1, y1), width=x2-x1, height=y2-y1, linewidth=1.5, edgecolor='r', facecolor='none')

ax.add_patch(rect)

ax.set_axis_off()

3. 결과

아주 간단하게 구현해낸 ML image classification 으로 사이즈가 큰 사진을 랜덤으로 크롭하고 배열하고 skewed한 데이터를 (윌리가 사진에 한명 뿐이니 비대칭이 아주 크다.) class_weight 설정으로 불균형 데이터에 가중치를 두어 잡았다.

4. 참고

https://github.com/kairess/find_waldo/blob/master/train.ipynb

GitHub - kairess/find_waldo: 인공지능의 월리를 찾아라!

인공지능의 월리를 찾아라! Contribute to kairess/find_waldo development by creating an account on GitHub.

github.com

https://www.kaggle.com/kairess/find-waldo

Find Waldo

www.kaggle.com